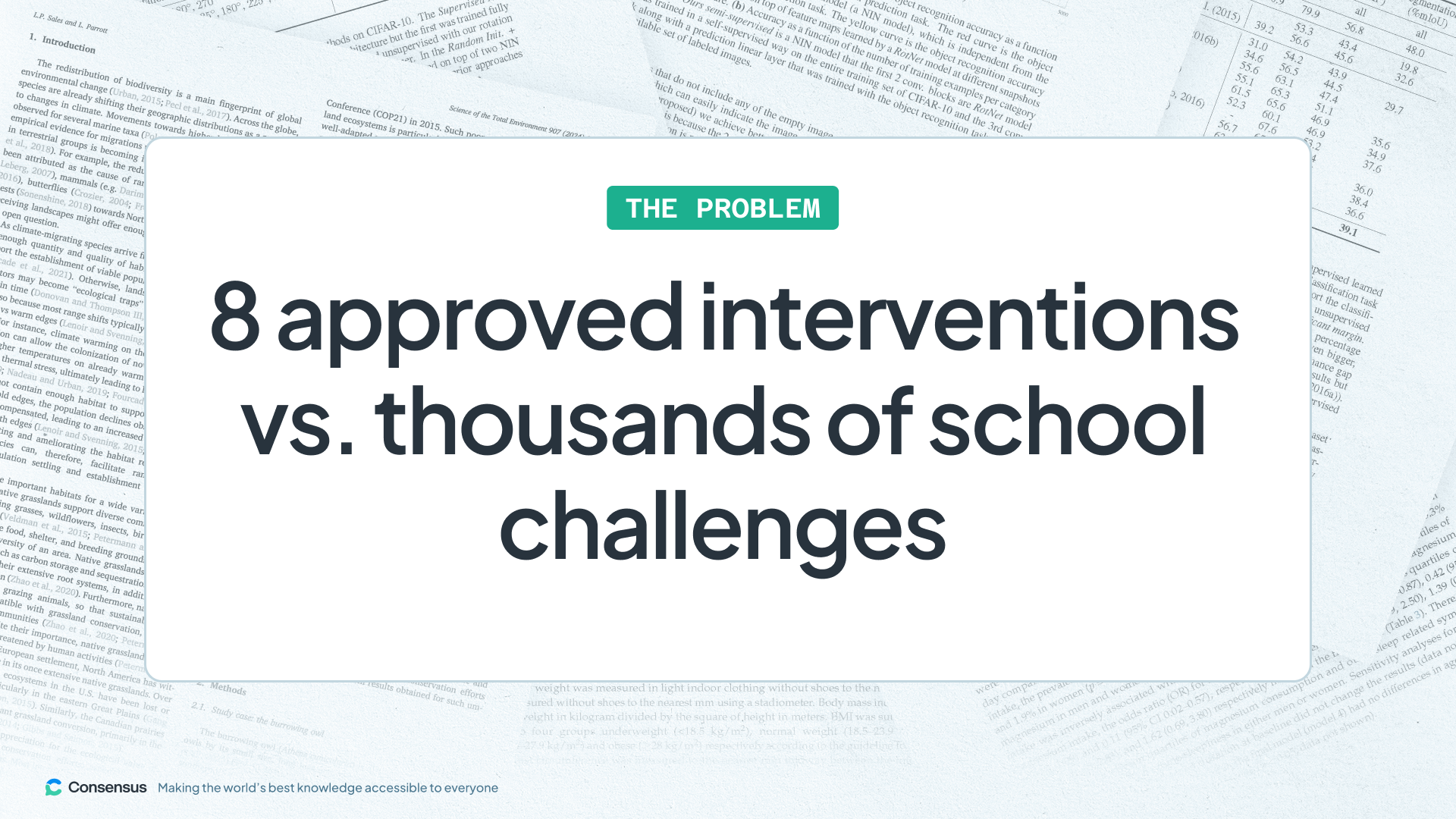

Federal law requires schools to use evidence-based interventions, but only eight are officially approved for the thousands of challenges schools face. A pilot study run by Dr. Seth Hunter found that aspiring principals using Consensus were statistically significantly more likely to find research useful and easy to use than those using traditional methods like Google Scholar, suggesting AI could finally bridge the gap between academic research and school practice.

The Evidence Gap

Every year, thousands of education studies are published with the potential to improve schools. Yet principals and policymakers rarely use this evidence. Without tools or training to find and interpret research, they rely on generic interventions that check compliance boxes but don’t help students. Progress stalls, and children miss out on solutions that could change their lives.

The Scale of the Gap is Staggering

Since 2002, when the No Child Left Behind Act took effect, federal law has required underperforming schools to use “evidence-based” interventions backed by rigorous, peer-reviewed research. Yet in practice, only eight interventions have cleared federal review and are readily accessible to school leaders, leaving them with far too few options for the thousands of challenges they face. With so few choices, principals often pick from the list simply to stay in compliance, not because the options match their schools’ needs.

Take declining student attendance. Schools send automated text reminders to parents when the real issue might be bullying that requires counseling. Math score declines trigger sweeping reforms when the actual problem is vocabulary gaps affecting specific student groups. The intervention fails, but compliance boxes get checked.

Testing Consensus as a Bridge

Hunter wondered if AI could break this cycle.

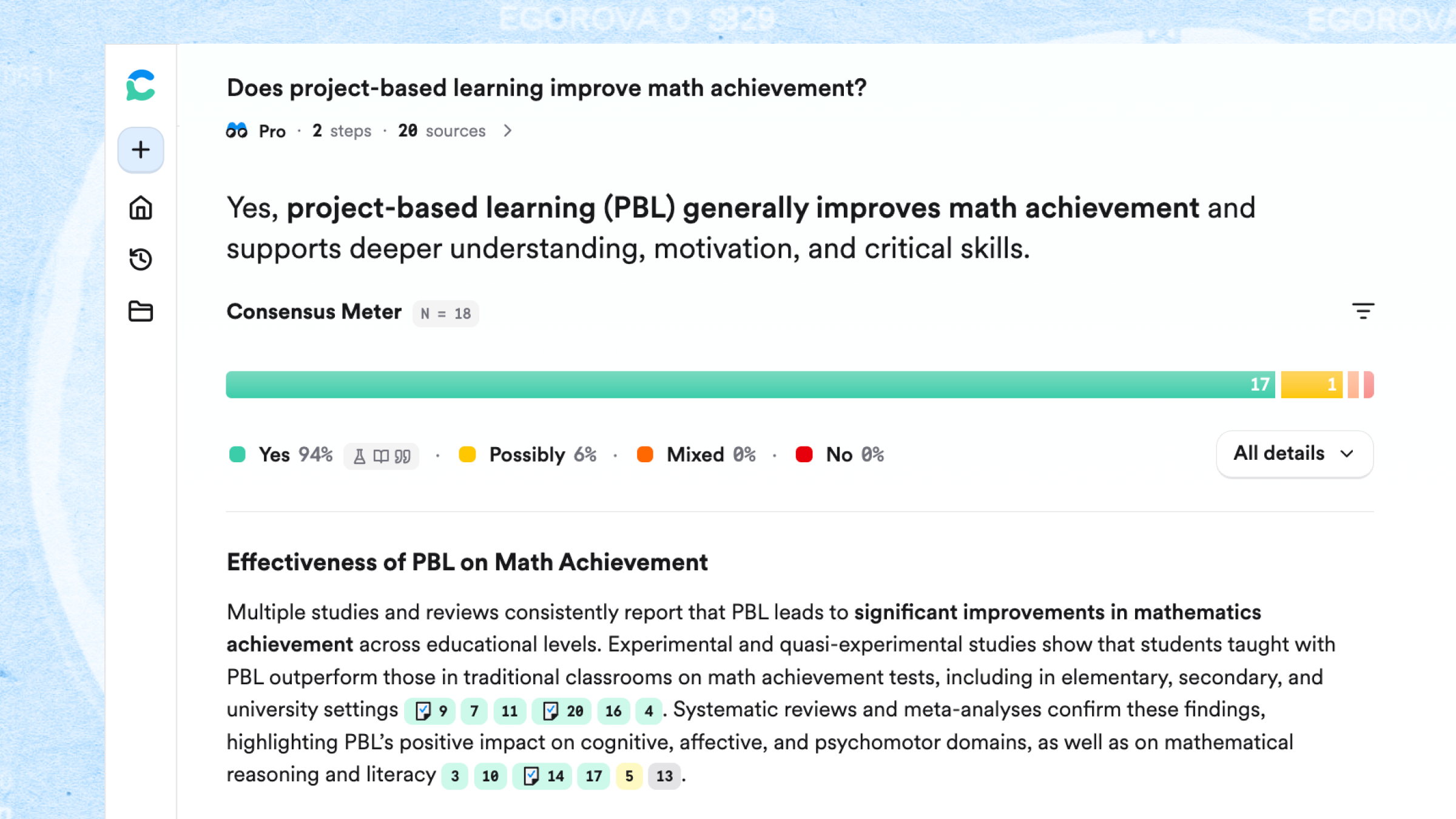

To find out, he designed a pilot study with 40 aspiring principals in George Mason University’s Education Leadership MEd program, randomly assigning half to use an AI-enabled workflow built around Consensus, while the other half, the control group, relied on traditional methods like Google Scholar and federal repositories. Hunter chose Consensus because it addressed two critical problems: its filters help surface rigorous studies that meet federal requirements, and its clear summaries make intimidating academic articles accessible to busy school leaders.

Transformative Results

The results were striking. Students using the Consensus-based workflow said it was significantly easier to find and understand rigorous research. On surveys, they were statistically significantly more likely to agree the tools would be useful in their future roles as education leaders and that the tools were easy to use (effect sizes +0.16 and +0.17 SD, respectively). Even more importantly, for the first time since 2018, Hunter’s pre-service principals told him they could see themselves applying this caliber of research in schools. For him, that was a breakthrough.

These findings suggest that AI-enabled tools like Consensus could meaningfully shrink the gap between technical academic research and K-12 research use. Hunter envisions the potential extending far beyond compliance: math coaches could have rigorous research “in their back pocket” to help teachers improve vocabulary instruction for specific student populations, or principals could quickly find evidence-based strategies for emerging challenges.

Scaling to Schools

Hunter is careful to note the study’s limitations. It was a classroom pilot based on self-reports, not causal evidence of impact on actual plan quality or student outcomes. Still, the early signals are promising. His team is now partnering with the Virginia Department of Education to test the workflow with practicing principals and district leaders. They plan to use large language models to systematically analyze school improvement plans statewide. The goal is to compare the quality and relevance of interventions chosen by districts using the AI workflow with those chosen by districts relying on traditional methods. This will provide the first rigorous assessment of whether AI tools can actually improve educational decision-making at scale.

Hunter’s work represents only the beginning of what is possible when AI helps translate rigorous research into practical solutions. As more educators gain access to these tools, evidence-based decision making may finally become the norm rather than the exception in schools. The question is not whether AI can help, but how quickly we can scale these approaches to reach the students who need them most.

If you are using Consensus in education or in another field where research translation matters, we would like to hear from you. Contact us at [email protected] to share how AI-powered research tools are changing the way you work.

Disclaimer: This article describes a classroom pilot led by Dr. Seth Hunter. The views expressed are his own and do not represent George Mason University.
